Introduction

The popular vision of open source software development is one of informal, even chaotic, collaboration among hackers (not crackers) piecing together applications, tools and infrastructure, which raises questions of quality, stability and repeatability. Nothing could be further from the truth. Open source projects, especially the larger ones, are very disciplined, following best practices for software engineering and community development. Moreover, rather than forming communities composed of lone hackers, today's open source projects are developed under the auspices of major organizations, including tech companies, banks, insurance companies and even branches of government.

It is true that the practices of open source communities can vary greatly, as can the deliverables, but the same holds for proprietary software. So how can users and integrators of open source determine the status, stability and stature of projects under consideration?

This blog lays out example metrics for open source communities and the code they deliver. The KPIs - Key Performance Indicators - include both objective, quantifiable metrics and more intangible ones.

Activity

The most salient indices describe measurable movement within a community and/or code base. These indices include:

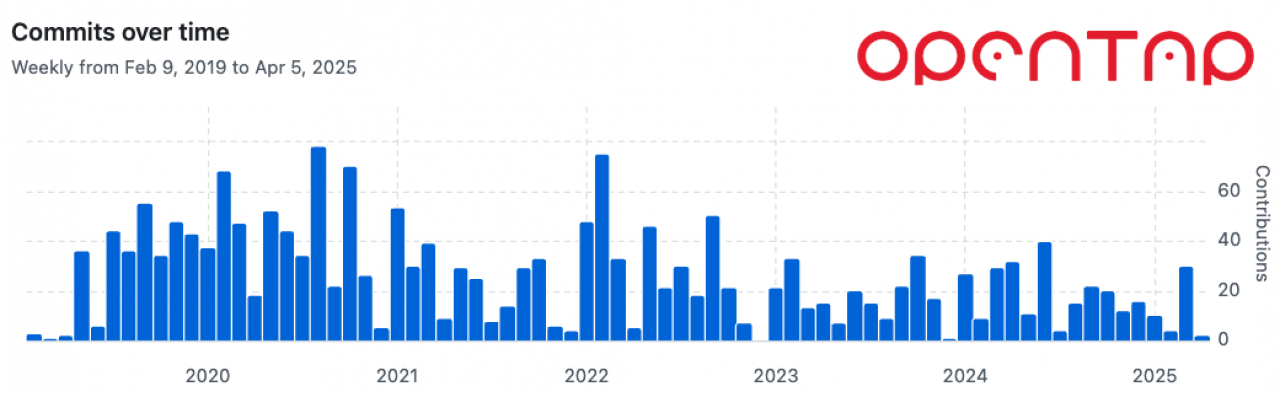

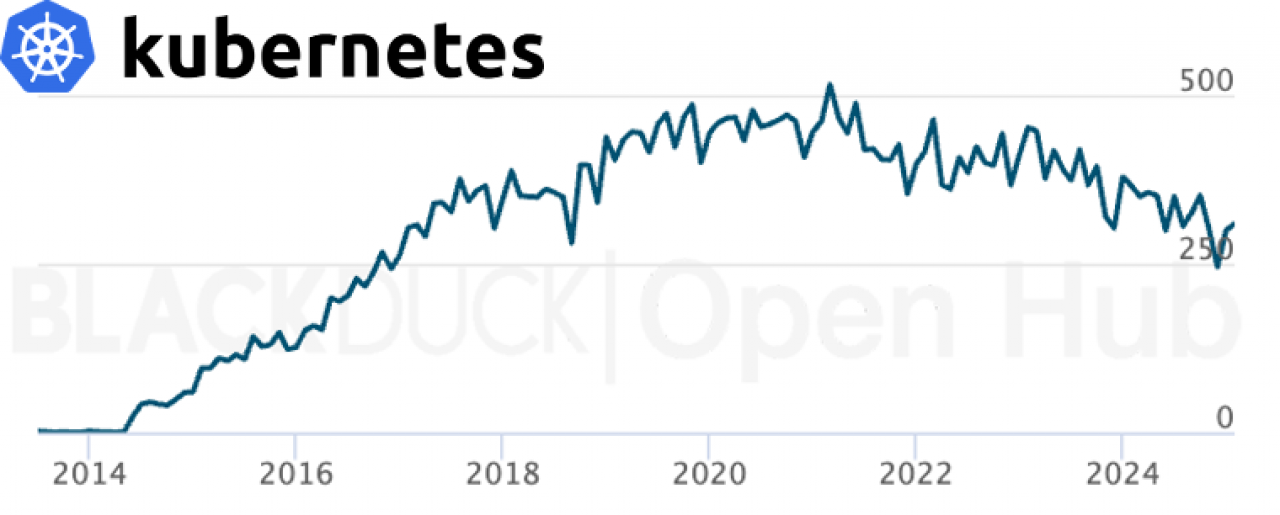

Pull Requests and Commits

Bug reports and feature requests

Messages on a mailing list or forum
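As a rough illustration of how such activity counts can be derived, the sketch below tallies commits per calendar month from a list of ISO 8601 timestamps. The function name `commits_per_month` and the sample timestamps are invented for this example; in practice the input might come from a command such as `git log --format=%aI` or from a hosting platform's API. The same tally would apply to bug reports or mailing list messages.

```python
from collections import Counter
from datetime import datetime


def commits_per_month(timestamps):
    """Count commits per 'YYYY-MM' bucket from ISO 8601 timestamp strings."""
    counts = Counter()
    for ts in timestamps:
        moment = datetime.fromisoformat(ts)
        counts[moment.strftime("%Y-%m")] += 1
    # Return the buckets in chronological order.
    return dict(sorted(counts.items()))


# Illustrative timestamps only, not data from any real project.
sample = [
    "2024-01-03T09:15:00+00:00",
    "2024-01-17T14:02:00+00:00",
    "2024-02-01T08:30:00+00:00",
]
print(commits_per_month(sample))
```

Note that each commit is bucketed by the month in its own recorded time zone, which is usually good enough for trend watching.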

Size Measurements

Community Size

Developer communities grow as a project takes on increasingly business-critical roles across multiple organizations.

End-user community size is very difficult to measure. Project sites sometimes track download numbers, but there is almost no way (short of embedded telemetry) to know how many of those downloads result in actual usage, or whether that usage is by one user or many. A more reliable, if indirect, measurement covering a subset of users would be the number of subscriptions or seats sold by third parties productizing project software.

Code Size

Code size and composition are indicative of the level of contribution to a project, but the salience of KLoC (thousands of lines of code) varies by project maturity and technology.

Early in a project's lifespan, growth is usually slow, as relatively few developers make seminal contributions to reach minimal viable functionality. However, when a project is born from a substantial contribution of existing code, its starting size will of course be larger. The same is true for whole-subsystem contributions later in the lifecycle.

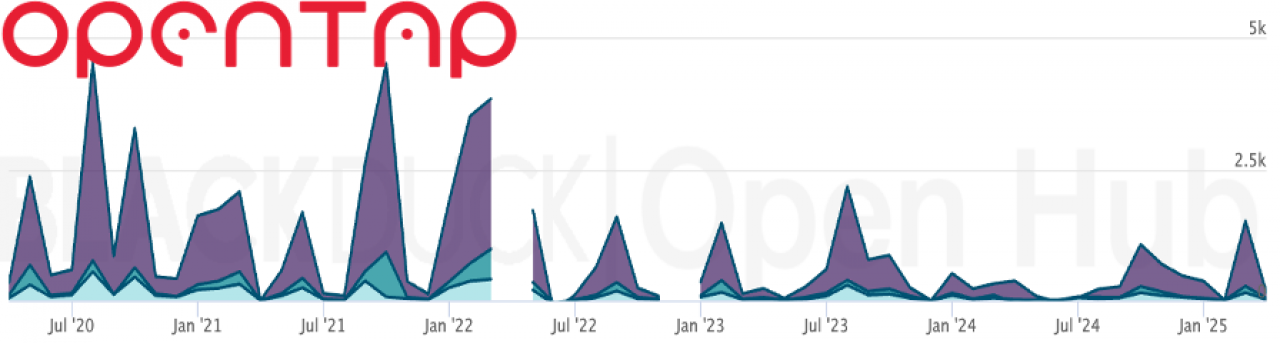

Platform-type projects, e.g., OpenTAP or operating system kernels, will usually undergo incremental growth as contributors expand the corpus of plugins, device drivers and other interfaces.

Performance

Performance constitutes several different types of KPI:

Code Benchmarks - For code with measurable run-time attributes, both point-in-time and historical performance values are interesting KPIs. When the author worked at an embedded Linux startup, he published metrics for interrupt and preemption latency in the Linux kernel, along with system size KPIs. For open source compilers, relevant KPIs include compilation rates (LoC/minute) and run-time benchmarks on different types of hardware.

Project Performance - How well does an open source project handle its workload? KPIs include backlog size, ticket history and the ratio of new to closed tickets.

Project Growth - New features and patches accepted.

Community Leadership - How significant is an organization in the governance of a project? KPIs include roles held and seats on the board and the TSC.

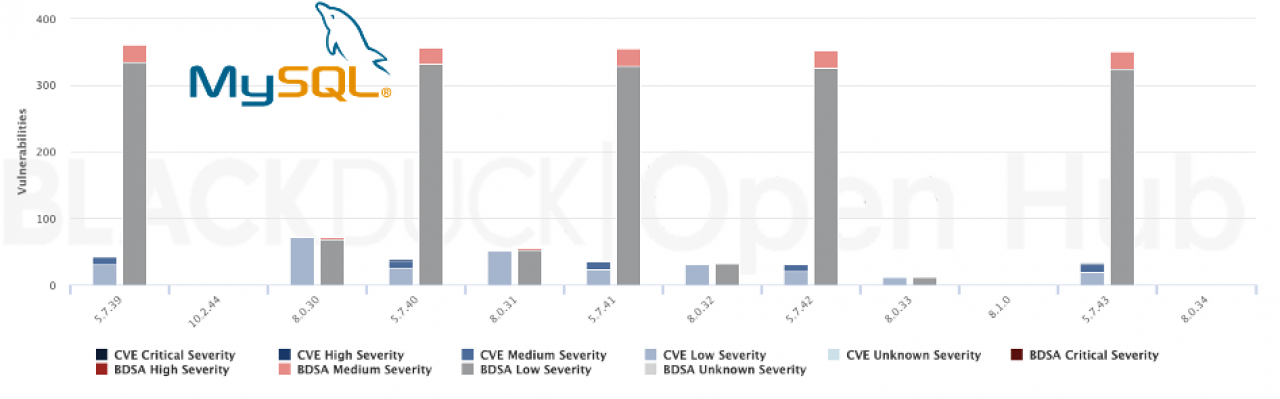
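The project performance KPIs listed above (ticket backlog and the ratio of new to closed tickets) reduce to simple arithmetic. The following is a minimal sketch under assumed inputs: the function names, the `(opened, closed)` tuple layout and the sample figures are all hypothetical, not taken from any particular issue tracker.

```python
def new_to_closed_ratio(opened, closed):
    """Return opened/closed tickets for a period, or None if none were closed."""
    if closed == 0:
        return None
    return opened / closed


def backlog_trend(periods, starting_backlog=0):
    """Return the running backlog after each (opened, closed) period."""
    backlog = starting_backlog
    history = []
    for opened, closed in periods:
        backlog += opened - closed
        history.append(backlog)
    return history


# Hypothetical monthly (opened, closed) counts for illustration only.
periods = [(40, 30), (35, 35), (20, 45)]
print([new_to_closed_ratio(o, c) for o, c in periods])
print(backlog_trend(periods, starting_backlog=50))
```

A ratio that stays above 1 means the backlog is growing; a ratio below 1 suggests the project is working through its queue.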

Security

Holistic measures of security are difficult to derive, but a very useful index measures the number of known vulnerabilities in a project's code base and their criticality, usually measured in terms of number and types of CVEs.
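One way to turn a list of known CVEs into such an index is to bucket them by severity. The sketch below assumes each CVE comes with a CVSS v3.x base score and applies the standard qualitative rating scale (Low below 4.0, Medium below 7.0, High below 9.0, Critical from 9.0 up). The function names and the sample scores are hypothetical.

```python
from collections import Counter


def severity(score):
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def severity_profile(scores):
    """Count known vulnerabilities per severity rating."""
    return dict(Counter(severity(s) for s in scores))


# Hypothetical base scores, not drawn from any real project.
scores = [9.8, 7.5, 5.3, 3.1, 7.2]
print(severity_profile(scores))
```

Tracking this profile per release, rather than as a single total, shows whether critical issues are being fixed faster than they are found.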

Other Contributions

Not all activities and contributions are direct and visible, but all types can be measured:

Events - number of events, cost per event, attendees, press mentions

Sponsorships and Bounties - number of devs sponsored, monetary value, open bounties

Donations - number and value by third parties to the project or project foundation

Marketing - size of marketing team (if any), budget (if any), press mentions

Productization - number of companies integrating project code, number of products, value of those products

Tools

Obtaining metrics for projects of interest has become quite easy. Tools abound for exploring the indices outlined in this blog, including:

Tooling in GitHub, GitLab and other repositories

Third-party tools such as Gource, plus products from Black Duck, SCANOSS and a dozen other software composition analysis (SCA) vendors

Integratable dashboard components from Bitergia and others

These tools provide an objective view of open source project KPIs. A more subjective but also important view of project viability comes from the opinions of peer developers expressed in newsgroups and other review venues.

KPI Considerations

As with any type of metadata, context is key to understanding the meaning underlying KPIs.

Context

Independence - Freestanding projects vs. those in close orbit around the founding entity (usually a company or academic institution).

Maturity - When was the project founded? How does it score under frameworks like the SEI Capability Maturity Model?

Environment - Organizational vs. project success. An open source project could benefit its founding organization greatly while having limited impact on a larger ecosystem; that is, the project never achieves "escape velocity" and is really still an inner source project or a code dump.

"Gaming" KPIs

Sneaky contributors sometimes "game" KPIs to inflate the number of contributions. Developers at a global consumer electronics company infamously broke their patches and other contributions into smaller pieces, both to meet internal quotas for project participation and to juice the external reputation of the company.

Breaking contributions into smaller bites is not always devious: maintainers are more likely to accept more succinct and digestible patches. A different organization complained to the author that their patches to the Linux kernel were meeting with rejection; it turned out that those contributions were, on average, 3X larger than the project norm.
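A quick sanity check for either situation is to compare an organization's average patch size against the project norm. The sketch below is an assumption-laden illustration: `size_ratio` is a made-up helper, patch size is taken to mean lines changed per patch (obtainable, for instance, from `git log --numstat`), and the sample numbers are invented.

```python
from statistics import mean


def size_ratio(own_patch_sizes, project_patch_sizes):
    """Ratio of an organization's mean patch size to the project-wide mean."""
    return mean(own_patch_sizes) / mean(project_patch_sizes)


# Invented lines-changed figures for illustration only.
own = [600, 900, 300]
project = [150, 250, 200]
print(size_ratio(own, project))
```

A ratio well above 1 suggests patches that maintainers may find hard to review, while a ratio far below 1 combined with an unusually high patch count could hint at contributions being split to inflate the numbers.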

Conclusion

This blog has laid out a range of readily available KPIs for use in real-world comparisons across projects and in decision-making around adoption and integration. These KPIs and others provide an objective basis for open source project rating and ranking that complements, but doesn't definitively substitute for, opinions voiced by developers and shared across forums. They also remind us to "do our homework" before committing to a long-term relationship with a project and its code base.