Artificial Intelligence (AI) and Machine Learning (ML) are bringing new functionality to applications across information technology. Projects such as ChatGPT, which is built on large language models, and various image-generation engines garner popular attention, but AI and ML also have the potential to enhance software testing and test automation in myriad ways.

This blog post explores how AI and ML can enhance testing and test automation.

AI and ML – what’s the difference?

AI and ML are closely related but not quite the same thing.

Google Cloud defines the two concepts as follows:

AI is the broader concept of enabling a machine or system to sense, reason, act, or adapt like a human

ML is an application of AI that allows machines to extract knowledge from data and learn from it autonomously

Machine learning and artificial intelligence are both umbrella categories. AI is the overarching term that covers a variety of approaches and algorithms. ML sits under that umbrella alongside other subfields such as robotics, expert systems, and natural language processing, and deep learning is in turn a subfield of ML.

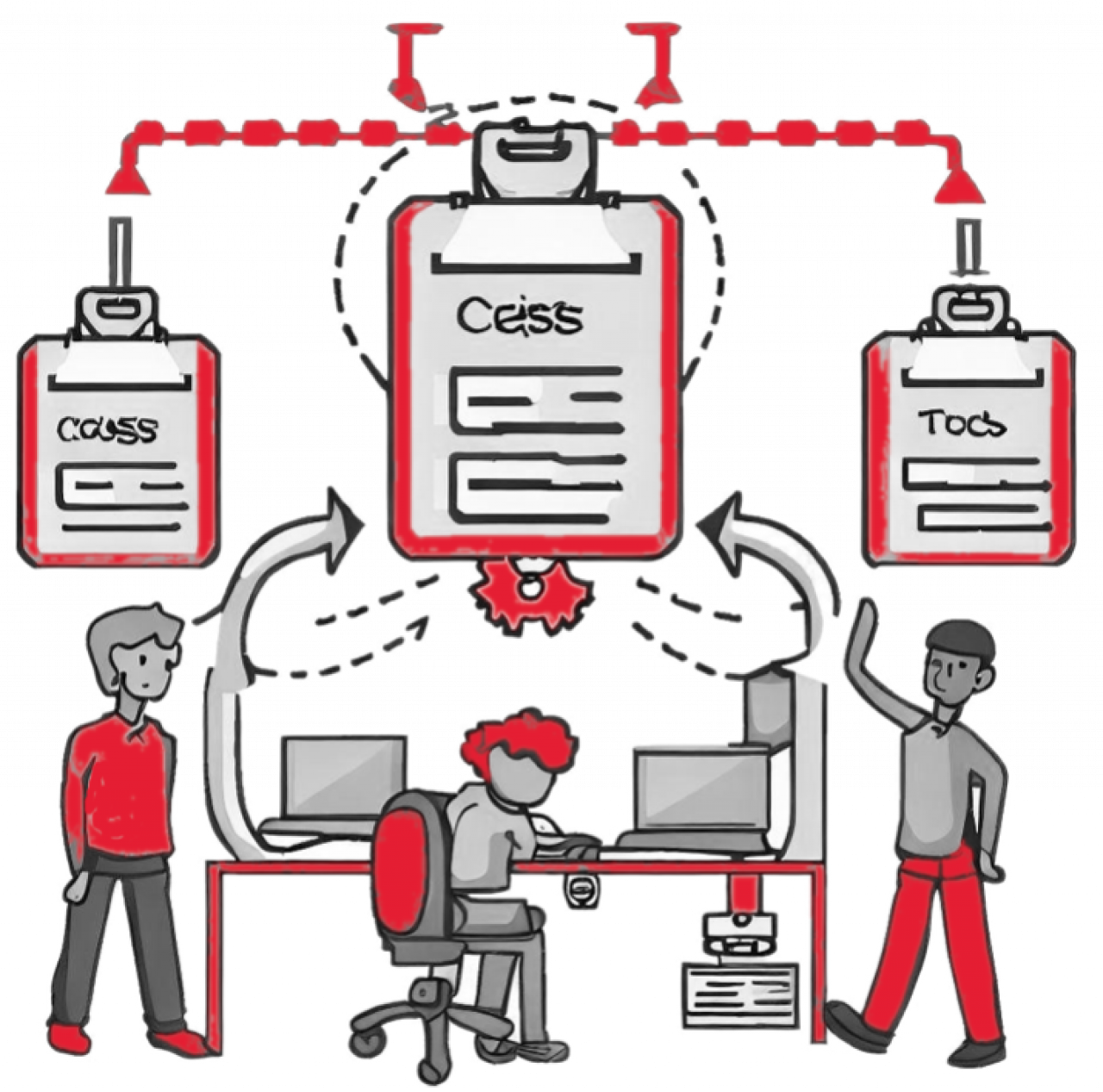

AI and ML in Test Automation

AI has the potential to improve software testing and test automation by augmenting human capabilities, enhancing efficiency, and enabling more robust test coverage. ML is key to observing test systems in operation, learning from the data they produce, and finding ways to optimize how they run.

There are multiple ways to apply AI to improve software testing:

Test Case Generation: AI, using machine learning, can automatically generate test cases based on input-output mappings, code coverage analysis, or inferred program behavior. AI can explore vast search spaces to identify edge cases and generate diverse test inputs, improving overall coverage.

Test Prioritization and Optimization: AI can analyze codebases, historical test results, and user feedback to prioritize test cases based on impact and likelihood of failure. By intelligently ordering and optimizing test execution, AI can reduce testing time and resource requirements while maximizing the detection of critical defects.
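As a minimal sketch of history-based prioritization, the Python below ranks tests by an exponentially weighted failure rate, so that recent failures count more than old ones. The `RunHistory` type, the `decay` value, and the sample history are hypothetical; an AI-driven tool would typically feed richer signals (code changes, coverage, durations) into a trained model rather than this hand-written score.

```python
from dataclasses import dataclass


@dataclass
class RunHistory:
    """Hypothetical per-test history: outcomes of past runs, oldest first (True = failed)."""
    name: str
    outcomes: list[bool]


def failure_score(outcomes: list[bool], decay: float = 0.8) -> float:
    """Exponentially weighted failure rate: recent runs weigh more than older ones."""
    weight, total, weighted_failures = 1.0, 0.0, 0.0
    for failed in reversed(outcomes):  # walk from the most recent run backwards
        total += weight
        if failed:
            weighted_failures += weight
        weight *= decay
    return weighted_failures / total if total else 0.0


def prioritize(histories: list[RunHistory]) -> list[str]:
    """Return test names ordered so the most failure-prone tests run first."""
    ranked = sorted(histories, key=lambda h: failure_score(h.outcomes), reverse=True)
    return [h.name for h in ranked]


history = [
    RunHistory("test_login", [False, False, False, False]),
    RunHistory("test_checkout", [False, True, False, True]),
    RunHistory("test_search", [True, False, False, False]),
]
print(prioritize(history))  # ['test_checkout', 'test_search', 'test_login']
```

`test_search` failed once, but long ago, so it ranks below `test_checkout`, which failed in its most recent run.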

Test Oracles: AI can assist in creating accurate and reliable test oracles, which determine whether the observed output of a system under test is correct. By learning from large datasets, AI can help predict expected outputs and validate the correctness of test results.

Defect Prediction and Regression Testing: AI can analyze historical data, code changes, and bug reports to predict which areas of the codebase are most likely to contain defects. Such predictions can guide regression testing toward the most vulnerable sections of the codebase, saving time and effort while maintaining test coverage.

Log Analysis and Debugging: AI can analyze log files, stack traces, and error messages, helping testers and developers identify root causes of failures more efficiently. AI can assist in categorizing discovered issues, suggesting potential fixes, and reducing time spent on manual debugging.
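A common first step in categorizing failures from logs is to normalize each message into a template by masking its variable fields (numbers, hex addresses, quoted strings), then group messages that share a template. The sketch below does this with hand-written regular expressions; it is a rule-based illustration, not a trained model, and the regexes and sample log lines are hypothetical. ML-based log tools typically learn templates or cluster messages from data, but the grouping idea is the same.

```python
import re
from collections import defaultdict

# Variable fields to mask, applied in order; these rules are illustrative assumptions.
_VARIABLE_FIELDS = [
    (re.compile(r"0x[0-9a-fA-F]+"), "<HEX>"),
    (re.compile(r"\b\d+(\.\d+)?\b"), "<NUM>"),
    (re.compile(r"'[^']*'"), "<STR>"),
]


def to_template(message: str) -> str:
    """Mask variable tokens so messages from the same code path collapse together."""
    for pattern, placeholder in _VARIABLE_FIELDS:
        message = pattern.sub(placeholder, message)
    return message


def group_failures(lines: list[str]) -> dict[str, list[str]]:
    """Bucket raw error lines by template, largest bucket first."""
    groups: dict[str, list[str]] = defaultdict(list)
    for line in lines:
        groups[to_template(line)].append(line)
    return dict(sorted(groups.items(), key=lambda kv: len(kv[1]), reverse=True))


sample_log = [
    "Timeout after 30 s connecting to 'db-1'",
    "NullPointerException at 0x7ffe12",
    "Timeout after 45 s connecting to 'db-2'",
    "Timeout after 30 s connecting to 'cache'",
]
for template, members in group_failures(sample_log).items():
    print(f"{len(members)} x {template}")
```

Here the three timeouts collapse into one category, so a tester investigates one root cause instead of three seemingly different errors.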

Test Maintenance: AI can monitor codebase changes, track dependencies, and update test cases and test data as software evolves. By understanding how changes affect testing artifacts, AI can help keep test suites relevant and accurate.

Security Testing: AI can assist in automating security testing by identifying and cataloguing vulnerabilities, performing penetration testing, and analyzing code and system behavior for security risks. AI can learn from known vulnerabilities, threat intelligence, and security patterns to enhance the effectiveness of security testing processes.

While AI can enhance software testing and test automation, it’s best employed as a complement to human expertise. Human testers and developers play a crucial role in designing, validating, and interpreting the results of AI-powered testing tools to ensure the quality and reliability of software systems.

Applying AI to Test Case Generation

Some of the AI applications listed above might seem exotic or far-fetched. By contrast, test case generation is very concrete and practical.

The following are several examples of AI techniques used to generate software test cases:

Search-Based Software Testing: Search-based techniques, such as genetic algorithms and other evolutionary algorithms, can generate test cases automatically. These algorithms explore the space of possible inputs and optimize test coverage against selected criteria, such as branch coverage.
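The search-based approach can be sketched with the simplest evolutionary algorithm, a (1+1) EA: keep one candidate input, mutate it, and keep the child if its fitness is no worse. Fitness here is a "branch distance" that measures how close an input comes to satisfying the condition guarding a hard-to-reach branch. The function under test, `TARGET`, and the mutation step sizes are hypothetical; real tools compute branch distances by instrumenting the code rather than hard-coding them, and many use full genetic algorithms with populations and crossover.

```python
import random
from typing import Optional

TARGET = 4242  # value guarding the hypothetical rare branch below


def system_under_test(x: int) -> str:
    """Hypothetical function with a branch that random inputs rarely reach."""
    if x == TARGET:
        return "rare branch"
    return "common branch"


def branch_distance(x: int) -> int:
    """Fitness: how far x is from satisfying the branch condition (0 means covered)."""
    return abs(x - TARGET)


def one_plus_one_ea(lo: int, hi: int, max_iters: int = 20_000, seed: int = 1) -> Optional[int]:
    """(1+1) evolutionary algorithm: mutate one candidate, keep the child if no worse."""
    rng = random.Random(seed)
    parent = rng.randint(lo, hi)
    for _ in range(max_iters):
        if branch_distance(parent) == 0:
            break
        step = rng.choice([-100, -10, -1, 1, 10, 100])
        child = min(hi, max(lo, parent + step))  # mutation, clamped to the input domain
        if branch_distance(child) <= branch_distance(parent):
            parent = child  # selection: keep the fitter (or equally fit) candidate
    return parent if branch_distance(parent) == 0 else None


test_input = one_plus_one_ea(0, 10_000)
print(test_input, system_under_test(test_input))
```

Pure random sampling would hit the rare branch with probability about 1 in 10,001 per try; the fitness gradient lets the search walk straight to it.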

Model-Based Testing involves creating a model of the system under test and generating test cases from that model. AI techniques, such as symbolic execution or constraint solving, can be used to automatically analyze the model and generate test inputs that exercise different paths, conditions, or states of the system.
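To illustrate the generation step of model-based testing, the sketch below describes a hypothetical login screen as a finite state machine and derives one test per transition: breadth-first search finds the shortest action sequence that reaches the transition's source state, then the transition itself is fired. This uses plain graph search, not symbolic execution or constraint solving; the states and actions are assumptions for illustration.

```python
from collections import deque

# Hypothetical model of a login screen: (state, action) -> next state.
MODEL = {
    ("logged_out", "enter_valid_password"): "logged_in",
    ("logged_out", "enter_wrong_password"): "error",
    ("error", "retry"): "logged_out",
    ("logged_in", "log_out"): "logged_out",
}


def path_to(model, start, goal):
    """Breadth-first search: shortest list of actions that drives start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, actions = queue.popleft()
        if state == goal:
            return actions
        for (src, action), dst in model.items():
            if src == state and dst not in seen:
                seen.add(dst)
                queue.append((dst, actions + [action]))
    return None  # goal is unreachable from start


def transition_tests(model, start):
    """One test per transition: reach its source state, fire it, expect its target state."""
    tests = []
    for (src, action), dst in model.items():
        prefix = path_to(model, start, src)
        if prefix is not None:
            tests.append((prefix + [action], dst))
    return tests


for actions, expected in transition_tests(MODEL, "logged_out"):
    print(" -> ".join(actions), "| expect:", expected)
```

Each generated sequence would then be replayed against the real system, and the state it ends in compared with the state the model predicts.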

Fuzz Testing, also known as fuzzing, bombards the system under test with large volumes of invalid, unexpected, or random inputs. AI can be applied to generate diverse, targeted fuzz inputs intelligently, maximizing the chances of triggering vulnerabilities or uncovering unexpected behavior.
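For reference, here is a minimal mutation-based fuzzer in Python: it repeatedly applies one random edit to a valid seed input and records any input that makes the target raise an exception. This is the plain random baseline that AI-guided and coverage-guided fuzzers improve on by learning which mutations are worth trying. `parse_ratio`, the mutation alphabet, and the iteration count are hypothetical choices for illustration.

```python
import random
import string

# Characters the mutator may insert; this alphabet is an illustrative assumption.
ALPHABET = string.digits + ":-x"


def parse_ratio(text: str) -> float:
    """Hypothetical system under test: parses "a:b" and returns a / b."""
    numerator, denominator = text.split(":")
    return int(numerator) / int(denominator)


def mutate(data: str, rng: random.Random) -> str:
    """Apply one random edit to the input: replace, insert, or delete a character."""
    chars = list(data)
    op = rng.choice(["replace", "insert", "delete"]) if chars else "insert"
    if op == "insert":
        chars.insert(rng.randrange(len(chars) + 1), rng.choice(ALPHABET))
    elif op == "replace":
        chars[rng.randrange(len(chars))] = rng.choice(ALPHABET)
    else:
        del chars[rng.randrange(len(chars))]
    return "".join(chars)


def fuzz(seed_input: str, iterations: int = 5_000, seed: int = 0) -> dict[str, str]:
    """Mutate the seed repeatedly; keep the first input that raises each exception type."""
    rng = random.Random(seed)
    findings: dict[str, str] = {}
    for _ in range(iterations):
        candidate = mutate(seed_input, rng)
        try:
            parse_ratio(candidate)
        except Exception as exc:  # any uncaught exception counts as a finding here
            findings.setdefault(type(exc).__name__, candidate)
    return findings


for error, example in fuzz("3:4").items():
    print(f"{error}: {example!r}")
```

Starting from the valid input `"3:4"`, single edits quickly expose both malformed-input errors (`ValueError`) and a division by zero (for example `"3:0"`).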

Machine Learning-Based Testing: ML algorithms can be trained to learn patterns from existing test cases and generate new ones based on them. By analyzing input-output mappings, code patterns, or existing test suites, machine learning can generate test inputs or transformations that mimic the characteristics of valid and faulty inputs.
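One of the simplest generative models that fits this description is a character-level Markov chain: it learns which character tends to follow each short context in existing test inputs, then samples new inputs with similar structure. The sketch below is an order-2 chain trained on hypothetical e-mail test inputs; production ML-based generators use far richer models, and the boundary markers `^` and `$` are assumed never to occur in real inputs.

```python
import random
from collections import defaultdict
from typing import Optional

START, END = "^", "$"  # boundary markers, assumed never to occur in real inputs

# Hypothetical existing test inputs that the model learns from.
EXAMPLES = ["alice@example.com", "bob@test.org", "carol@example.org", "dave@test.com"]


def train(examples: list[str], order: int = 2) -> dict[str, list[str]]:
    """Record which character follows each `order`-character context in the examples."""
    model: dict[str, list[str]] = defaultdict(list)
    for text in examples:
        padded = START * order + text + END
        for i in range(len(padded) - order):
            model[padded[i:i + order]].append(padded[i + order])
    return dict(model)


def generate(model: dict[str, list[str]], order: int = 2,
             rng: Optional[random.Random] = None, max_len: int = 40) -> str:
    """Sample a new input one character at a time from the learned transitions."""
    rng = rng if rng is not None else random.Random()
    context, out = START * order, []
    while len(out) < max_len:
        nxt = rng.choice(model[context])  # frequent successors are picked more often
        if nxt == END:
            break
        out.append(nxt)
        context = context[1:] + nxt
    return "".join(out)


model = train(EXAMPLES)
rng = random.Random(7)
for _ in range(5):
    print(generate(model, rng=rng))
```

Samples mostly resemble the training inputs but can recombine fragments (for instance a user name from one example with a domain from another), producing new valid-looking inputs and occasionally malformed ones.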

Property-Based Testing focuses on specifying properties or invariants that should always hold, and then automatically generating test cases to check them. AI can assist in generating complex test inputs that exercise those properties, verifying system behavior against them.
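The core loop of property-based testing fits in a few lines of standard-library Python: generate many random inputs, check an invariant on each, and report the first counterexample. The sketch below checks three properties of a sort function (output is ordered, is a permutation of the input, and re-sorting changes nothing). `my_sort` and the generator are hypothetical; libraries such as Hypothesis for Python or QuickCheck for Haskell add smarter generation and automatic shrinking of counterexamples, which this sketch omits.

```python
import random
from collections import Counter
from typing import Callable, Optional


def my_sort(items: list[int]) -> list[int]:
    """Hypothetical function under test (it simply delegates to the built-in here)."""
    return sorted(items)


def random_int_list(rng: random.Random) -> list[int]:
    """Input generator: lists of 0 to 20 integers in [-1000, 1000]."""
    return [rng.randint(-1000, 1000) for _ in range(rng.randint(0, 20))]


def sort_properties_hold(items: list[int]) -> bool:
    """Invariants any correct sort must satisfy."""
    out = my_sort(items)
    is_ordered = all(a <= b for a, b in zip(out, out[1:]))
    is_permutation = Counter(out) == Counter(items)  # same elements, same counts
    is_idempotent = my_sort(out) == out
    return is_ordered and is_permutation and is_idempotent


def check_property(prop: Callable[[list[int]], bool],
                   gen: Callable[[random.Random], list[int]],
                   runs: int = 200, seed: int = 0) -> Optional[list[int]]:
    """Evaluate `prop` on `runs` generated inputs; return the first counterexample, else None."""
    rng = random.Random(seed)
    for _ in range(runs):
        value = gen(rng)
        if not prop(value):
            return value
    return None


print(check_property(sort_properties_hold, random_int_list))  # None: no counterexample
```

A buggy variant such as `sorted(set(items))`, which silently drops duplicates, would fail the permutation property as soon as the generator produces a list with a repeated value.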

Reinforcement Learning algorithms can train agents that learn to interact with the system under test and generate test cases. By rewarding the agent for discovering failures or triggering specific behaviors, reinforcement learning can explore the system and generate relevant test inputs.
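To make the reward idea concrete, the sketch below uses tabular Q-learning: an agent sends short action sequences to a toy system and earns a reward of 1.0 whenever it triggers a failure, and every failing sequence it finds is recorded as a test case. `ToySystem`, its failing sequence, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are hypothetical; real reinforcement-learning testers work with much richer state, such as GUI screens, and often use function approximation instead of a lookup table.

```python
import random
from collections import defaultdict

ACTIONS = ["a", "b", "c"]


class ToySystem:
    """Hypothetical system under test: the input sequence a, b, a triggers a failure."""

    FAILING_SEQUENCE = ["a", "b", "a"]

    def __init__(self) -> None:
        self.history: list[str] = []

    def reset(self) -> tuple:
        self.history = []
        return ()

    def step(self, action: str):
        """Apply one action; return (new_state, failed, episode_done)."""
        self.history.append(action)
        failed = self.history == self.FAILING_SEQUENCE
        done = failed or len(self.history) >= len(self.FAILING_SEQUENCE)
        return tuple(self.history), failed, done


def q_learning(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning; the agent earns reward 1.0 whenever it triggers a failure."""
    rng = random.Random(seed)
    q = defaultdict(float)  # (state, action) -> estimated future reward
    env = ToySystem()
    failing_inputs = set()
    for _ in range(episodes):
        state, done = env.reset(), False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            next_state, failed, done = env.step(action)
            reward = 1.0 if failed else 0.0
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            if failed:
                failing_inputs.add(next_state)  # record the failing input sequence
            state = next_state
    return failing_inputs, q


failing, q_table = q_learning()
print(sorted(failing))
```

Once the reward is found, its value propagates back through the Q-table, so the agent increasingly steers toward the action sequence that exposes the failure.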

Conclusion

These examples demonstrate how AI techniques can be leveraged to automatically generate test cases, augmenting traditional testing approaches and improving test coverage. It's important to note that AI-generated test cases should be complemented with human expertise and validation to ensure relevance and accuracy.

A future blog will examine how AI/ML systems are themselves tested and opportunities for streamlining that testing with test automation.