Every year for the last four, Keysight has collaborated with faculty at the University of California Santa Cruz (UCSC) Baskin Engineering School to sponsor senior projects in test automation. This year, one of those projects focused on leveraging LLMs (Large Language Models) to streamline the creation of OpenTAP plugins in Python.

Approach

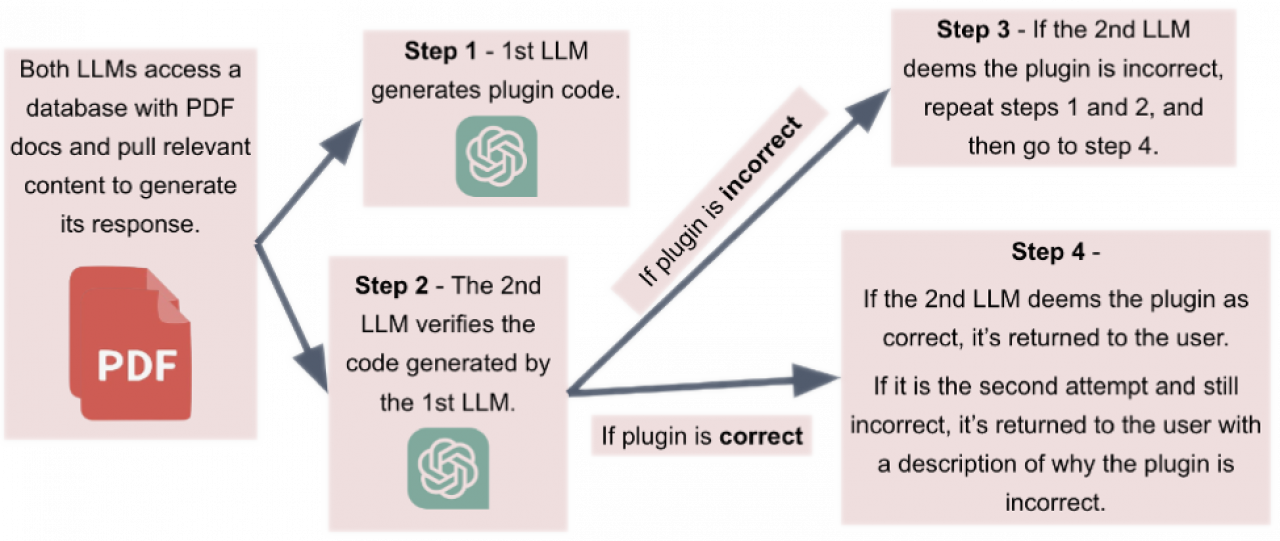

The program employs two LLMs that access a database of OpenTAP documentation files (PDFs) and pull relevant content for generating plugin code. One LLM generates the plugin code, and the second LLM verifies the code produced by the first. If the second LLM determines that the plugin is incorrectly implemented, the process repeats: the first LLM generates new code and the second LLM verifies the new attempt. If the second LLM deems the plugin correct on the first or second attempt, the system returns the generated code to the user for testing and use. If the second LLM is still not satisfied with the correctness of the plugin after the second attempt, the code is returned to the user with an explanation of the disposition and the detected errors highlighted.
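The control flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the team's implementation: `generate_with_review`, `Review`, `Result`, and the two callables standing in for the LLMs are hypothetical names, documentation retrieval is omitted, and passing the verifier's errors back to the generator is an assumption the article does not confirm.

```python
from dataclasses import dataclass, field
from typing import Callable, List

MAX_ATTEMPTS = 2  # the article describes at most two generation attempts


@dataclass
class Review:
    """Verdict from the verifying LLM (hypothetical structure)."""
    approved: bool
    errors: List[str] = field(default_factory=list)


@dataclass
class Result:
    """What is handed back to the user (hypothetical structure)."""
    code: str
    approved: bool
    attempts: int
    errors: List[str]


def generate_with_review(
    request: str,
    generate: Callable[[str, List[str]], str],
    verify: Callable[[str], Review],
) -> Result:
    """Generate plugin code, verify it, and retry once if verification fails."""
    feedback: List[str] = []  # assumption: verifier errors are fed back
    code = ""
    for attempt in range(1, MAX_ATTEMPTS + 1):
        code = generate(request, feedback)
        review = verify(code)
        if review.approved:
            return Result(code, True, attempt, [])
        feedback = review.errors
    # Still rejected after the last attempt: return the code with its errors.
    return Result(code, False, MAX_ATTEMPTS, feedback)


# Stand-ins for the two LLMs, used only to exercise the loop.
def fake_generate(request: str, feedback: List[str]) -> str:
    return "fixed" if feedback else "broken"


def fake_verify(code: str) -> Review:
    return Review(True) if code == "fixed" else Review(False, ["missing import"])


result = generate_with_review("power supply plugin", fake_generate, fake_verify)
print(result.approved, result.attempts)  # True 2
```

Capping the loop at a fixed number of attempts keeps the cost and latency of a request bounded, and returning the last rejected code together with the verifier's errors matches the fallback behavior described above.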

Challenges

Prompt Engineering

One of the major challenges the team faced was prompt engineering. Crafting prompts that consistently yielded accurate results was particularly difficult because of the diverse range of instruments, device categories, interfaces, and SCPI commands available. Additionally, because LLM output is not deterministic, identical prompts often produced different results, further complicating the task of achieving reliable outcomes.

Data Gathering

The plugin generation process, which uses a RAG (Retrieval-Augmented Generation) approach, relied heavily on SCPI documentation and device manuals. However, many of the commands were valid for only a few devices, necessitating extensive searches and the uploading of a large amount of additional documentation. Additionally, the RAG approach depended on the availability of sample plugins from Keysight. While C# plugins were extensively available from Keysight, finding instrument documentation related to Python proved to be much more challenging.
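To make the retrieval step concrete, the sketch below picks the documentation chunks most relevant to a request and places them in the prompt. It is only an illustration: simple token overlap stands in for whatever retrieval method the project actually used, and `tokenize`, `retrieve`, `build_prompt`, the prompt wording, and the sample chunks are all hypothetical.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lower-case the text and count its tokens (keeps SCPI punctuation)."""
    return Counter(re.findall(r"[a-z0-9:?*]+", text.lower()))


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return up to k chunks that share the most tokens with the query."""
    q = tokenize(query)
    scored = [(sum((q & tokenize(c)).values()), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for score, c in scored[:k] if score > 0]


def build_prompt(query: str, chunks: List[str]) -> str:
    """Prepend the retrieved documentation to the user's request."""
    context = "\n---\n".join(retrieve(query, chunks))
    return f"Use only this documentation:\n{context}\n\nTask: {query}"


# Hypothetical documentation chunks, e.g. extracted from device manuals.
docs = [
    "*IDN? returns the instrument identification string.",
    "MEASure:VOLTage:DC? returns a DC voltage reading.",
    "The front panel display can be dimmed from the utility menu.",
]

print(retrieve("read dc voltage", docs, k=1))
```

Because only the retrieved chunks reach the model, a command that is documented for one device but missing for another simply never appears in the prompt, which is one reason the quality of the uploaded documentation mattered so much.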

Automated Testing

The student team had to account for a wide range of tests and use case scenarios to ensure that the generated plugins were sufficiently functional. The testing process involved multiple steps:

Verifying that the plugin used the correct imports and functions.

Ensuring that the generated code adhered to Keysight plugin syntax.

Having a second LLM analyze the code to identify any needed modifications.

Compiling the generated plugin for OpenTAP, ensuring compatibility with the test automation platform.
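The first of the checks listed above can be approximated with Python's standard `ast` module. The sketch below is a minimal example, not the team's test harness: `imported_modules` and `check_plugin_source` are hypothetical helpers, the set of required module names is supplied by the caller, and the names used in the sample are assumptions. Parsing only confirms that the source is syntactically valid Python; it does not replace loading the plugin in OpenTAP.

```python
import ast
from typing import List, Set


def imported_modules(source: str) -> Set[str]:
    """Return the top-level module names imported by the source."""
    tree = ast.parse(source)  # raises SyntaxError on invalid Python
    modules: Set[str] = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                modules.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            modules.add(node.module.split(".")[0])
    return modules


def check_plugin_source(source: str, required: Set[str]) -> List[str]:
    """Return a list of problems; an empty list means the checks passed."""
    try:
        found = imported_modules(source)
    except SyntaxError as exc:
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]
    return [f"missing import: {name}" for name in sorted(required - found)]


# The required module names below are assumptions for illustration.
sample = "import OpenTap\nfrom opentap import *\n\nclass MyInstrument:\n    pass\n"
print(check_plugin_source(sample, {"opentap", "OpenTap"}))  # []
print(check_plugin_source("import os\n", {"opentap"}))  # ['missing import: opentap']
```

Running cheap static checks like this before the LLM review and the OpenTAP compilation step means obviously broken output can be rejected without spending a second model call on it.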

Updating Technologies

The team frequently had to adapt to new versions of technologies. Azure, for example, regularly updates its services and libraries (such as changes in chat completion versions or the deprecation of certain programs), requiring migration to newer versions.

Project Goals

Reducing Development Time for OpenTAP Plugins

The first goal was to significantly reduce the development time required to create OpenTAP plugins. Automating the plugin creation process with LLMs lets teams expedite plugin development while maintaining high standards of quality and reliability.

Automated Testing for Quality Assurance

To uphold a commitment to quality, each generated plugin undergoes automated verification. This automated testing framework ensures that all plugins meet stringent quality standards before deployment. Automating this crucial aspect of the development process can guarantee that plugins consistently deliver optimal performance and reliability, enhancing the overall user experience and satisfaction.

Results

Plugin Generation: Our system generates plugins tailored for a wide range of Keysight tools, and it takes approximately 2 minutes to generate a plugin. Typically, creating a plugin takes between 20 and 48 hours. With generation reduced to about 2 minutes, the team reports a decrease in development time of approximately 75%, allowing test engineers to focus more on innovation and less on routine plugin development. (See graph below for the time to create plugins for various devices.)

Streamlined Verification: Prior to deployment, each plugin undergoes rigorous verification to uphold stringent quality standards. This meticulous process ensures that every plugin is fully functional, including successful compilation and seamless connectivity to instruments. By adhering to these standards, we guarantee that only top-tier plugins are delivered to our users, enhancing their experience and productivity.

Compatibility Assurance: Our plugins are crafted to integrate with various Keysight software applications without the need for additional modifications. This alleviates compatibility concerns, enabling users to leverage their Keysight tools with confidence and efficiency.

Project Team Members

The project team members were seniors (and one junior) at UCSC studying computer engineering.

Click on the student images to view their LinkedIn profiles.

Project Artifacts

Feel free to download the project code and learn more about the project from the project presentation, the project poster and a demo video prepared by the students.

Click on the icons to visit the repo or download the artifacts.

Acknowledgments

The student team would like to thank the following Keysight staffers for their support: